Capture Audio with Websockets and Call Streams

This guide uses <Stream> and websockets to receive base64-encoded audio from a call. The audio can then be written to a file for storage, playback, or further processing such as transcription. We will focus on capturing a call's inbound and outbound audio tracks and saving them to WAV files.

What do I need?

You will need to install two third-party packages: pywav and websockets. The other modules used here (asyncio, json, and base64) ship with Python, so on Python 3.10 nothing else should be required.

For this guide, and for testing in general, we will also use ngrok to expose our local port to the internet.

Finally, you can check out the full code on GitHub.

How to run the application

To run the application, run app.py and create an ngrok tunnel for the appropriate port. By default, this is port 5000.

If you are running this code on your local machine, you may need a tunnel to test it. We recommend ngrok. You can learn more about how to use ngrok here.

Code Walkthrough

In the GitHub repository you will find two files: app.py and this readme.md.

Handling incoming Websocket Messages

First we will set up some variables for later use. inboundAudio and outboundAudio are lists that will contain our base64 audio payloads.

saveInbound and saveOutbound are booleans that will determine whether or not we write the audio payloads to a wave file later in our script.

import asyncio
import base64
import json

import pywav
import websockets

async def audioComp(websocket):
    inboundAudio = []
    outboundAudio = []
    saveInbound = False
    saveOutbound = True

Now we will create a try:except block to handle the messages from our websocket connection.

This try:except just catches closed connections, as we do not always receive a close message from SignalWire.

Then we can use an async for loop to iterate over the messages we receive from the websocket without blocking. Each message arrives as a JSON string, so we use json.loads to turn it into a Python dictionary.

Finally, we will parse our messages based on their event designation.

The start event provides details such as the callSid, streamSid, the tracks we can expect, and more. We will only use the callSid to name and organize our recordings.

A full example of what a start event returns can be found in our <Stream> documentation here.

The media events hold the base64-encoded audio that we will use to compile our wave files. We can do this by decoding the base64 and appending the decoded payload to the appropriate list.

    try:
        async for message in websocket:
            msg = json.loads(message)
            if msg['event'] == 'start':
                # msg['event'] is just the string 'start'; the call details sit under the 'start' key
                callId = msg['start']['callSid']
            if msg['event'] == 'media':
                media = msg['media']
                # Decode each base64 payload and keep the tracks separate
                if media['track'] == 'inbound':
                    inboundAudio.append(base64.b64decode(media['payload']))
                if media['track'] == 'outbound':
                    outboundAudio.append(base64.b64decode(media['payload']))
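To try this routing without a live call, the same logic can be pulled into a plain function and fed hand-written messages. This is only a minimal sketch: `handle_message` and the `state` dictionary are hypothetical names, and the sample messages assume the call details are nested under a `start` key and carry only the fields this guide uses.

```python
import base64
import json


def handle_message(raw, state):
    """Route one JSON message from the stream into `state` (hypothetical helper)."""
    msg = json.loads(raw)
    if msg["event"] == "start":
        # Assumption: call details are nested under the "start" key.
        state["callId"] = msg["start"]["callSid"]
    elif msg["event"] == "media":
        media = msg["media"]
        audio = base64.b64decode(media["payload"])
        # Keep each track in its own list so it can be saved separately.
        state[media["track"]].append(audio)
    return state


# Hand-written sample messages; real messages contain more fields.
state = {"callId": None, "inbound": [], "outbound": []}
payload = base64.b64encode(b"\xff\xff\xff").decode("ascii")
handle_message(json.dumps({"event": "start", "start": {"callSid": "example-call"}}), state)
handle_message(json.dumps({"event": "media", "media": {"track": "outbound", "payload": payload}}), state)
print(state["callId"], len(state["outbound"]))
```

Keeping the routing in a function like this makes it straightforward to check against saved messages before wiring it into the websocket loop.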

The last event we listen for is the stop event. When we receive it, we join our list of bytes and compile each track into its own .wav file using the pywav library.

In some cases we may only want to receive or handle a certain track, which is why we use the saveInbound and saveOutbound variables to toggle them on or off.

By default, the audio files are saved as Inbound- and Outbound- followed by the callSid, with a .wav extension.

Example of the file names

            if msg['event'] == 'stop':
                print('received stop, writing audio')
                if saveInbound:
                    inbound_bytes = b"".join(inboundAudio)
                    # 1 channel, 8000 Hz, 8 bits per sample, audio format 7 (mu-law)
                    wave_write = pywav.WavWrite("Inbound-" + callId + ".wav", 1, 8000, 8, 7)
                    wave_write.write(inbound_bytes)
                    wave_write.close()
                if saveOutbound:
                    outbound_bytes = b"".join(outboundAudio)
                    wave_write = pywav.WavWrite("Outbound-" + callId + ".wav", 1, 8000, 8, 7)
                    wave_write.write(outbound_bytes)
                    wave_write.close()
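Some tools cannot open mu-law WAV files. If that comes up, one option is to decode the audio to 16-bit PCM and write it with Python's built-in `wave` module instead of pywav. The sketch below is an alternative to the pywav calls, not part of the original app. It assumes the payloads are 8 kHz, single-channel G.711 mu-law, which is what the `1, 8000, 8, 7` arguments describe, and `ulaw_to_pcm16` and `write_pcm_wav` are hypothetical helper names.

```python
import struct
import wave


def ulaw_to_pcm16(byte):
    """Decode one G.711 mu-law byte to a signed 16-bit sample."""
    u = ~byte & 0xFF                      # mu-law bytes are stored inverted
    magnitude = (((u & 0x0F) << 3) + 0x84) << ((u >> 4) & 0x07)
    magnitude -= 0x84                     # remove the encoder's bias
    return -magnitude if u & 0x80 else magnitude


def write_pcm_wav(path, ulaw_bytes, sample_rate=8000):
    """Write mu-law audio as a mono 16-bit PCM WAV file."""
    samples = [ulaw_to_pcm16(b) for b in ulaw_bytes]
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)               # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

Inside the stop handler this could be called as `write_pcm_wav("Outbound-" + callId + ".wav", outbound_bytes)`. The resulting file is twice the size of the mu-law version, since each sample takes two bytes instead of one.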

Finally, we close our try:except by catching ConnectionClosed, so that we do not error out when the websocket closes without the appropriate message.

    except websockets.ConnectionClosed:
        print('connection ended')

Serving our Websocket Server

To serve our websocket server, we create an async function called main and use the websockets package to start the server.

websockets.serve takes three arguments (for our use): the function that handles the messages, the host, and the port.

Finally, we keep the server running indefinitely by awaiting asyncio.Future(), and start everything by calling asyncio.run(main()).

async def main():
    async with websockets.serve(audioComp, 'localhost', 5000):
        await asyncio.Future()  # run until the process is stopped

asyncio.run(main())
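Before exposing the server through a tunnel, it can help to check it locally. The sketch below builds a short fake call (one start message, a few media messages, one stop message) and sends it to the server with a websockets client. `build_test_messages` and `send_test_call` are hypothetical helpers. The messages assume the call details are nested under a `start` key and contain only the fields this guide uses, and the payload is mu-law silence (`0xFF` bytes) rather than real audio.

```python
import asyncio
import base64
import json


def build_test_messages(call_sid="test-call", frames=3, track="outbound"):
    """Return JSON strings that imitate a very short streamed call."""
    # 160 bytes of 0xFF is 20 ms of mu-law silence at 8 kHz.
    payload = base64.b64encode(b"\xff" * 160).decode("ascii")
    messages = [{"event": "start", "start": {"callSid": call_sid}}]
    messages += [{"event": "media", "media": {"track": track, "payload": payload}}
                 for _ in range(frames)]
    messages.append({"event": "stop"})
    return [json.dumps(m) for m in messages]


async def send_test_call(url="ws://localhost:5000"):
    """Send the fake call to a running server."""
    import websockets  # imported here so the builder works without the package

    async with websockets.connect(url) as ws:
        for message in build_test_messages():
            await ws.send(message)


# With app.py running in another terminal:
# asyncio.run(send_test_call())
```

With the default settings, this should leave an Outbound-test-call.wav file in the directory where app.py is running.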

Testing The Application

Now that we understand how the application runs, we can use ngrok and an XML bin to test it out!

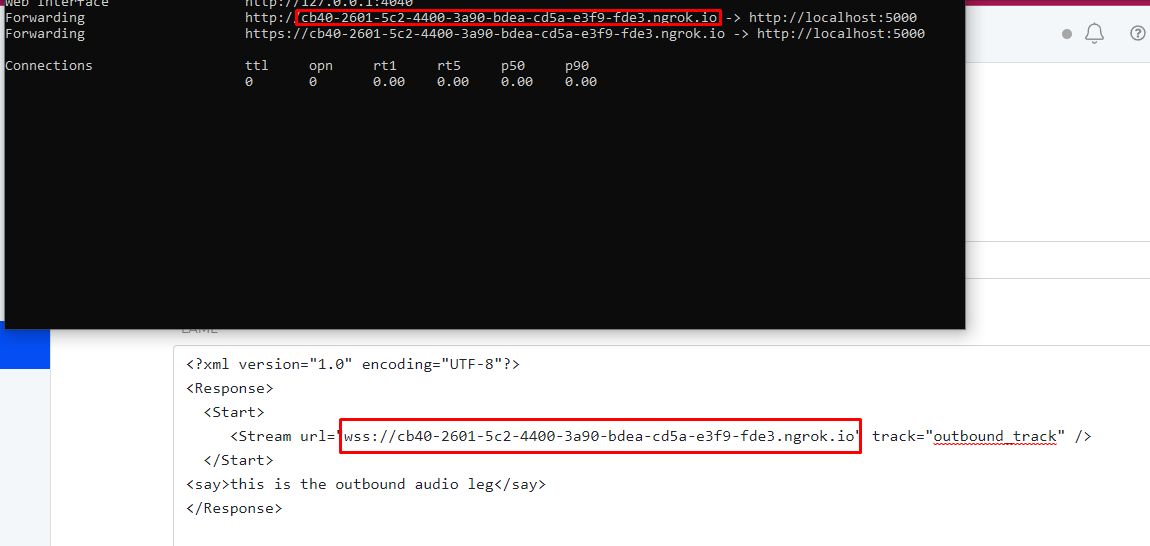

Set up your ngrok tunnel

By default, our websocket will run on localhost:5000, so once ngrok is installed we can use ngrok http 5000 to create a new tunnel, and grab the URL.

This URL will also be used for setting up our XML bin below.
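The XML bin needs the tunnel address as a wss URL rather than an http or https one. If you are scripting your setup, the swap can be done with the standard library. This is a small sketch; `to_wss` is a hypothetical helper and the hostname is a placeholder.

```python
from urllib.parse import urlsplit


def to_wss(tunnel_url):
    """Return the tunnel URL with its scheme swapped for wss."""
    return urlsplit(tunnel_url)._replace(scheme="wss").geturl()


# "example.ngrok.io" is a placeholder, not a real tunnel.
print(to_wss("https://example.ngrok.io"))
```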

Set up an XML bin

Now we will set our <Stream> url to the ngrok tunnel we just created, replacing the http with wss.

Here we can also specify which track we would like to stream to our websocket. By default, we stream the inbound audio (the caller's audio into SignalWire), but here we set track to outbound_track.

Doing this allows us to hear the opposite leg of the call, such as the audio from SignalWire, or the opposite leg of a call connected with the <Dial> verb.

<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Start>
    <Stream url="wss://8dd4-2601-5c2-4400-3a90-bdea-cd5a-e3f9-fde3.ngrok.io" track="outbound_track" />
  </Start>
  <Say>Congratulations, this is the outbound audio stream</Say>
</Response>

If all is well, you should have a recording that mirrors our <Say> statement in the Outbound- .wav audio file.

Due to the async nature of this setup, it is entirely possible to create both an inbound and an outbound stream and capture both at the same time! You can simply add an additional <Stream> verb to your XML bin, which will allow you to have independent recordings of both audio tracks.
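If you would rather generate the XML than edit it by hand, a two-stream document can be built with Python's standard library. This is a sketch under a few assumptions: `build_stream_xml` is a hypothetical helper, each <Stream> is wrapped in its own <Start>, the URL is a placeholder, and the `inbound_track` value is assumed to mirror the `outbound_track` value used above.

```python
import xml.etree.ElementTree as ET


def build_stream_xml(wss_url, tracks, message):
    """Build a <Response> that starts one <Stream> per requested track."""
    response = ET.Element("Response")
    for track in tracks:
        # Assumption: each stream gets its own <Start> wrapper.
        start = ET.SubElement(response, "Start")
        ET.SubElement(start, "Stream", {"url": wss_url, "track": track})
    ET.SubElement(response, "Say").text = message
    return ET.tostring(response, encoding="unicode")


# Placeholder URL; replace it with your own tunnel address.
print(build_stream_xml("wss://example.ngrok.io",
                       ["inbound_track", "outbound_track"],
                       "Both tracks are being streamed"))
```

Remember that saveInbound is False by default in app.py, so it needs to be set to True for the inbound recording to be written.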

Wrap-Up

This guide shows just one of many ways you can use websockets and SignalWire's Stream functionality to receive and handle audio directly from the call, without having to worry about storing the recordings on SignalWire and ingesting them to a private server at a later time. This could also be used for accessibility features such as real-time transcription for users who may have difficulties with auditory processing.

Required Resources:

Sign Up Here

If you would like to test this example out, create a SignalWire account and Space.

Please feel free to reach out to us on our Community Discord or create a Support ticket if you need guidance!